computer architecture

Computer architecture is a set of rules and methods that describe the functionality, organization, and implementation of computer systems. Some definitions of architecture define it as describing the capabilities and programming model of a computer but not a particular implementation. In other definitions computer architecture involves instruction set architecture design, microarchitecture design, logic design, and implementation.

The first documented computer architecture was in the correspondence between Charles Babbage and Ada Lovelace, describing the analytical engine. When building the computer Z1 in 1936, Konrad Zuse described in two patent applications for his future projects that machine instructions could be stored in the same storage used for data, i.e. the stored-program concept. Two other early and important examples are:

- John von Neumann’s 1945 paper, First Draft of a Report on the EDVAC, which described an organization of logical elements;

- Alan Turing’s more detailed Proposed Electronic Calculator for the Automatic Computing Engine, also from 1945, which cited John von Neumann’s paper.

The term architecture in computer literature can be traced to the work of Lyle R. Johnson, Frederick P. Brooks, Jr., and Mohammad Usman Khan, all members of the Machine Organization department in IBM’s main research center in 1959. Johnson had the opportunity to write a proprietary research communication about the Stretch, an IBM-developed supercomputer for Los Alamos National Laboratory (at the time known as Los Alamos Scientific Laboratory). To describe the level of detail for discussing the luxuriously embellished computer, he noted that his description of formats, instruction types, hardware parameters, and speed enhancements was at the level of system architecture, a term that seemed more useful than machine organization.

The discipline of computer architecture has three main subcategories:

- Instruction Set Architecture, or ISA. The ISA defines the machine code that a processor reads and acts upon, as well as the word size, memory address modes, processor registers, and data types.

- Microarchitecture, or computer organization, describes how a particular processor will implement the ISA. The size of a computer’s CPU cache, for instance, is an issue that generally has nothing to do with the ISA.

- System Design includes all of the other hardware components within a computing system. These include:

  - Data processing other than the CPU, such as direct memory access (DMA)

  - Other issues such as virtualization, multiprocessing, and software features.

There are other types of computer architecture. The following types are used in bigger companies like Intel, and account for 1% of all computer architecture.

- Macroarchitecture: architectural layers more abstract than microarchitecture.

- Assembly Instruction Set Architecture (ISA): A smart assembler may convert an abstract assembly language common to a group of machines into slightly different machine language for different implementations.

- Programmer Visible Macroarchitecture: higher-level language tools such as compilers may define a consistent interface or contract to programmers using them, abstracting differences between underlying ISA, UISA, and microarchitectures. For example, the C, C++, and Java standards each define a different Programmer Visible Macroarchitecture.

- UISA (Microcode Instruction Set Architecture): a group of machines with different hardware level microarchitectures may share a common microcode architecture, and hence a UISA.

- Pin Architecture: The hardware functions that a microprocessor should provide to a hardware platform, e.g., the x86 pins A20M, FERR/IGNNE or FLUSH. Also, messages that the processor should emit so that external caches can be invalidated (emptied). Pin architecture functions are more flexible than ISA functions because external hardware can adapt to new encodings, or change from a pin to a message. The term architecture fits, because the functions must be provided for compatible systems, even if the detailed method changes.

The purpose is to design a computer that maximizes performance while keeping power consumption in check, keeps costs low relative to the expected performance, and is also very reliable. Many aspects must be considered for this, including instruction set design, functional organization, logic design, and implementation. The implementation involves integrated circuit design, packaging, power, and cooling. Optimization of the design requires familiarity with topics ranging from compilers and operating systems to logic design and packaging.

INSTRUCTION SET ARCHITECTURE

An instruction set architecture (ISA) is the interface between the computer’s software and hardware, and can also be viewed as the programmer’s view of the machine. Computers do not understand high-level programming languages such as Java or C++, or most other programming languages in use. A processor only understands instructions encoded in some numerical fashion, usually as binary numbers. Software tools, such as compilers, translate those high-level languages into instructions that the processor can understand.

Besides instructions, the ISA defines items in the computer that are available to a program—e.g. data types, registers, addressing modes, and memory. Instructions locate these available items with register indexes (or names) and memory addressing modes.
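How an instruction locates an operand can be illustrated with a minimal sketch. The machine below is hypothetical: the register count, the memory size, and the four mode names are assumptions made for illustration and are not taken from any particular ISA.

```python
from dataclasses import dataclass, field


@dataclass
class Machine:
    """Hypothetical machine state: four registers and 16 words of memory."""
    regs: list = field(default_factory=lambda: [0] * 4)
    mem: list = field(default_factory=lambda: [0] * 16)


def fetch_operand(m: Machine, mode: str, value: int) -> int:
    """Return the operand selected by an (assumed) addressing mode."""
    if mode == "immediate":          # the operand is the value itself
        return value
    if mode == "register":           # the operand is in register number `value`
        return m.regs[value]
    if mode == "direct":             # the operand is at memory address `value`
        return m.mem[value]
    if mode == "register_indirect":  # the register holds the memory address
        return m.mem[m.regs[value]]
    raise ValueError(f"unknown addressing mode: {mode}")


m = Machine()
m.regs[1] = 5   # register 1 holds the address 5
m.mem[5] = 42   # memory word 5 holds 42
m.mem[1] = 7    # memory word 1 holds 7
```

With this state, the same field value 1 selects four different operands depending on the mode: the constant 1, the contents of register 1, the contents of memory word 1, or the memory word that register 1 points to.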

The ISA of a computer is usually described in a small instruction manual, which describes how the instructions are encoded. It may also define short (vaguely) mnemonic names for the instructions. The names can be recognized by a software development tool called an assembler. An assembler is a computer program that translates a human-readable form of the ISA into a computer-readable form. Disassemblers are also widely available, usually as part of debuggers and other software tools used to isolate and correct malfunctions in binary computer programs.

ISAs vary in quality and completeness. A good ISA compromises between programmer convenience (how easy the code is to understand), size of the code (how much code is required to do a specific action), cost of the computer to interpret the instructions (more complexity means more hardware is needed to decode the instructions), and speed of the computer (more complex decoding hardware means longer decode time). For example, a single-instruction ISA, such as one whose only instruction subtracts one from a value and resets the value to a higher number when it reaches zero, is both inexpensive and fast, but such ISAs are neither convenient for programmers nor helpful for code size. Memory organization defines how instructions interact with the memory, and how memory interacts with itself.
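The trade-off for single-instruction ISAs can be made concrete with a small interpreter. The sketch below uses SUBLEQ ("subtract and branch if less than or equal to zero"), a well-known one-instruction design chosen here for illustration; it is not the exact instruction described above. The memory layout and the halting convention (a negative jump target) are assumptions.

```python
def run_subleq(mem, max_steps=10_000):
    """Run a SUBLEQ program in place and return the memory.

    Each instruction is three words (a, b, c): mem[b] -= mem[a];
    if the result is <= 0, jump to c, otherwise fall through.
    A negative jump target halts the machine (convention assumed here).
    """
    pc = 0
    for _ in range(max_steps):
        if pc < 0:
            return mem
        a, b, c = mem[pc], mem[pc + 1], mem[pc + 2]
        mem[b] -= mem[a]
        pc = c if mem[b] <= 0 else pc + 3
    raise RuntimeError("step limit reached")


# Compute Y = Y + X using a scratch word Z that starts at 0.
# Words 0-8 hold three instructions; words 9-11 hold X, Y, Z.
X, Y, Z = 9, 10, 11
memory = [
    X, Z, 3,    # Z = Z - X  (Z becomes -X)
    Z, Y, 6,    # Y = Y - Z  (Y becomes Y + X)
    Z, Z, -1,   # Z = 0, then halt
    7, 5, 0,    # data: X = 7, Y = 5, Z = 0
]
result = run_subleq(memory)
```

The decoder is trivial because there is only one instruction, but a single addition already takes three instructions and nine words of memory, which illustrates why such ISAs score poorly on convenience and code size.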

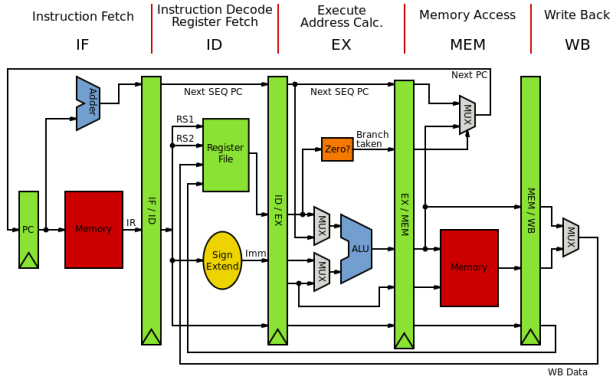

COMPUTER ORGANIZATION

Computer organization helps optimize performance-based products. For example, software engineers need to know the processing power of processors. They may need to optimize software in order to gain the most performance for the lowest price. This can require quite detailed analysis of the computer’s organization. For example, in an SD card, the designers might need to arrange the card so that the most data can be processed in the fastest possible way.

Computer organization also helps plan the selection of a processor for a particular project. Multimedia projects may need very rapid data access, while virtual machines may need fast interrupts. Sometimes certain tasks need additional components as well. For example, a computer capable of running a virtual machine needs virtual memory hardware so that the memory of different virtual computers can be kept separated. Computer organization and features also affect power consumption and processor cost.
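The way virtual memory hardware keeps guests separated can be sketched as address translation through per-guest page tables. The page size, the table contents, and the function name below are assumptions for illustration; real hardware uses multi-level tables and additional permission checks.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


def translate(page_table: dict, vaddr: int) -> int:
    """Map a virtual address to a physical address via a page table."""
    vpn, offset = divmod(vaddr, PAGE_SIZE)  # virtual page number, byte offset
    if vpn not in page_table:
        raise LookupError(f"page fault at virtual address {vaddr:#x}")
    return page_table[vpn] * PAGE_SIZE + offset


# Hypothetical guests: each has its own table, and no physical frame is shared.
guest_a = {0: 10, 1: 11}
guest_b = {0: 20, 1: 21}

addr = 0x1004  # virtual page 1, offset 4
phys_a = translate(guest_a, addr)
phys_b = translate(guest_b, addr)
```

Both guests use the same virtual address, yet the translations land in different physical frames, so neither guest can read or overwrite the other's memory through that address.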

IMPLEMENTATION

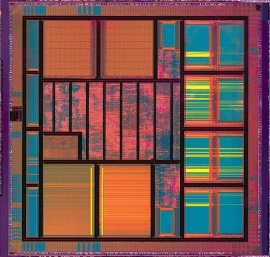

Once an instruction set and microarchitecture are designed, a practical machine must be developed. This design process is called the implementation. Implementation is usually not considered architectural design, but rather hardware design engineering. Implementation can be further broken down into several steps:

- Logic Implementation designs the circuits required at a logic gate level.

- Circuit Implementation does transistor-level designs of basic elements (gates, multiplexers, latches, etc.) as well as of some larger blocks (ALUs, caches, etc.) that may be implemented at the logic gate level, or even at the physical level if the design calls for it.

- Physical Implementation draws physical circuits. The different circuit components are placed in a chip floorplan or on a board and the wires connecting them are created.

- Design Validation tests the computer as a whole to see if it works in all situations and all timings. Once the design validation process starts, the design at the logic level is tested using logic emulators. However, this is usually too slow to run realistic tests. So, after making corrections based on the first tests, prototypes are constructed using field-programmable gate arrays (FPGAs). Most hobby projects stop at this stage. The final step is to test prototype integrated circuits. Integrated circuits may require several redesigns to fix problems.
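The logic implementation and design validation steps can be illustrated on a very small scale. The sketch below builds a one-bit full adder from AND, OR, and XOR gates, chains it into a ripple-carry adder, and checks every input combination against ordinary integer addition. The function names and the 4-bit width are assumptions for illustration; real designs are written in hardware description languages and are far too large for exhaustive checking.

```python
from itertools import product


# Primitive gates operating on single bits (0 or 1).
def AND(a, b):
    return a & b


def OR(a, b):
    return a | b


def XOR(a, b):
    return a ^ b


def full_adder(a, b, cin):
    """One-bit full adder built only from the gates above."""
    partial = XOR(a, b)
    total = XOR(partial, cin)
    carry = OR(AND(a, b), AND(partial, cin))
    return total, carry


def ripple_add(x, y, width=4):
    """Chain full adders to add two `width`-bit unsigned integers."""
    carry, result = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result, carry


def validate(width=4):
    """Exhaustively compare the gate-level adder with integer addition."""
    for x, y in product(range(1 << width), repeat=2):
        result, carry = ripple_add(x, y, width)
        if (carry << width) | result != x + y:
            return False
    return True
```

Calling `validate()` checks all 256 input pairs for the 4-bit case. The number of cases grows exponentially with the width, which hints at why validating a full processor requires emulators, FPGA prototypes, and carefully chosen tests rather than exhaustive enumeration.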

computer engineering

Computer engineering is a discipline that integrates several fields of electrical engineering and computer science required to develop computer hardware and software. Computer engineers usually have training in electronic engineering (or electrical engineering), software design, and hardware–software integration rather than only software engineering or electronic engineering. Computer engineers are involved in many hardware and software aspects of computing, from the design of individual microcontrollers, microprocessors, personal computers, and supercomputers, to circuit design. This field of engineering focuses not only on how computer systems themselves work, but also on how they integrate into the larger picture.

Usual tasks involving computer engineers include writing software and firmware for embedded microcontrollers, designing VLSI chips, designing analog sensors, designing mixed signal circuit boards, and designing operating systems. Computer engineers are also suited for robotics research, which relies heavily on using digital systems to control and monitor electrical systems like motors, communications, and sensors. In many institutions, computer engineering students are allowed to choose areas of in-depth study in their junior and senior years, because the full breadth of knowledge used in the design and application of computers is beyond the scope of an undergraduate degree. Other institutions may require engineering students to complete one or two years of General Engineering before declaring computer engineering as their primary focus.

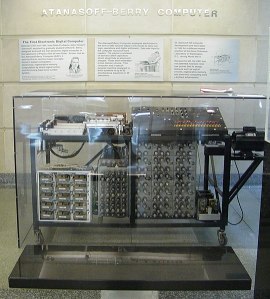

Computer engineering began in 1939 when John Vincent Atanasoff and Clifford Berry began developing the world’s first electronic digital computer through physics, mathematics, and electrical engineering. John Vincent Atanasoff was once a physics and mathematics teacher at Iowa State University, and Clifford Berry was a former graduate in electrical engineering and physics. Together, they created the Atanasoff-Berry Computer, also known as the ABC, which took 5 years to complete. The original ABC was dismantled and discarded in the 1940s. As a tribute to the late inventors, a replica of the ABC was completed in 1997; it took a team of researchers and engineers four years and $350,000 to build.

The first computer engineering degree program in the United States was established in 1972 at Case Western Reserve University in Cleveland, Ohio. As of 2015, there were 250 ABET-accredited computer engineering programs in the US. In Europe, accreditation of computer engineering schools is done by a variety of agencies that are part of the EQANIE network. Due to increasing job requirements for engineers who can concurrently design hardware, software, and firmware, and manage all forms of computer systems used in industry, some tertiary institutions around the world offer a bachelor’s degree generally called computer engineering. Both computer engineering and electronic engineering programs include analog and digital circuit design in their curricula. As with most engineering disciplines, a sound knowledge of mathematics and science is necessary for computer engineers.

There are two major specialties in computer engineering: hardware and software.

COMPUTER HARDWARE ENGINEERING

Most computer hardware engineers research, develop, design, and test various computer equipment. This can range from circuit boards and microprocessors to routers. Some update existing computer equipment to be more efficient and work with newer software. Most computer hardware engineers work in research laboratories and high-tech manufacturing firms. Some also work for the federal government. According to the Bureau of Labor Statistics (BLS), 95% of computer hardware engineers work in metropolitan areas. They generally work full-time. Approximately 33% of their work requires more than 40 hours a week. As of 2015, the typical computer hardware engineer with a bachelor’s degree made 111,730 USD annually, or 53.72 USD per hour. As of 2014, the expected ten-year growth for computer hardware engineering was an estimated three percent, and there was a total of 77,700 jobs that same year.

COMPUTER SOFTWARE ENGINEERING

Computer software engineers develop, design, and test software. They construct and maintain computer programs, as well as set up networks such as intranets for companies. Software engineers can also design or code new applications to meet the needs of a business or individual. Some software engineers work independently as freelancers and sell their software products or applications to an enterprise or individual. As of 2015, a computer software engineer with a bachelor’s degree made 100,690 USD annually, or 48.41 USD per hour. As of 2014, the expected ten-year growth for computer software engineering was an estimated seventeen percent, and there was a total of 1,114,000 jobs that same year.